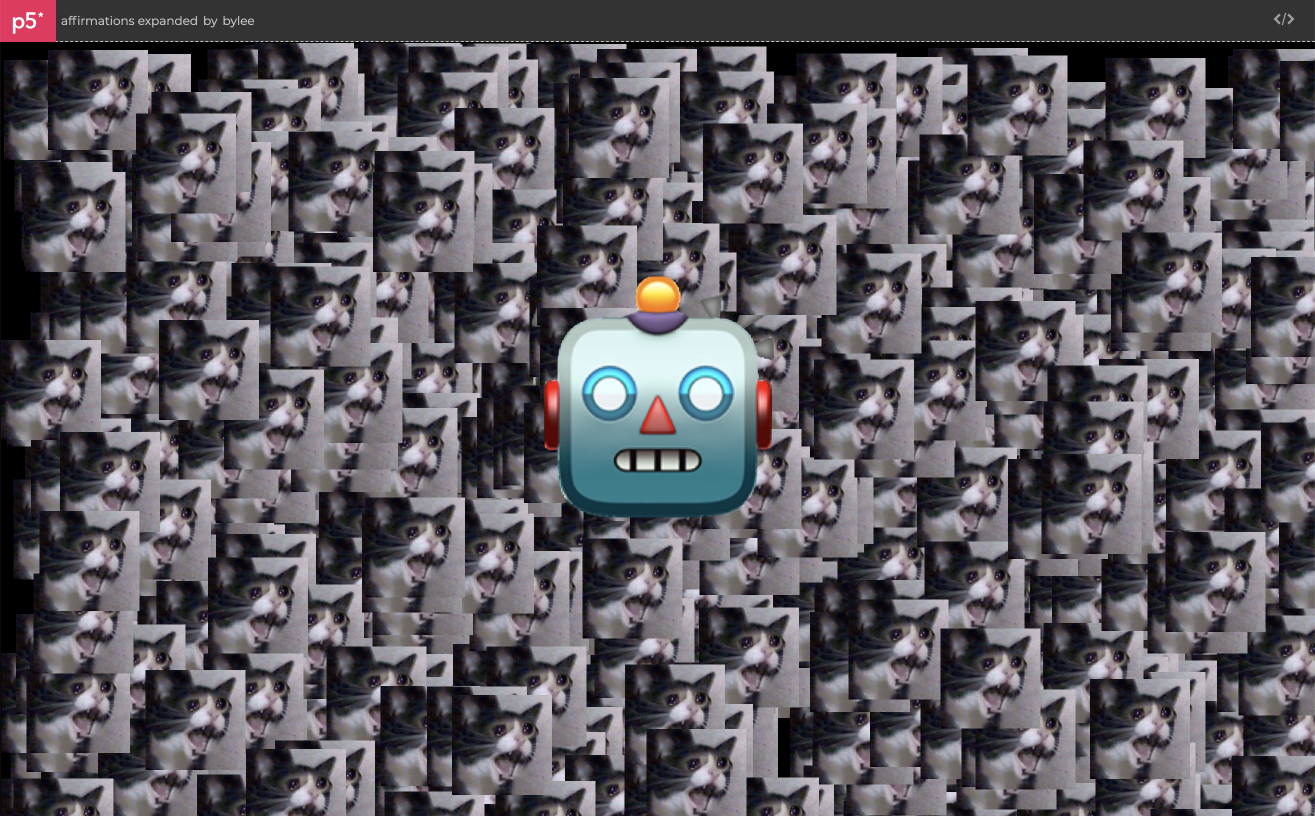

This is a birthday party simulator for celebrating a friend’s birthday in quarantine. I made it in Jonathan Hanahan’s Conditional Design class in Spring 2020, using Google’s Teachable Machine, Glitch, and p5.js base code from The Coding Train.

Project Brief

Challenge: During the pandemic, Zoom has become a proxy for social interaction. We are also all sick of being on Zoom, so let’s do something to make it more fun!

Goals: Create a web project using Teachable Machine, Google’s machine learning tool

Solution: An interactive website for celebrating birthdays virtually, using p5.js and Teachable Machine

Timeline: March 2020 to April 2021

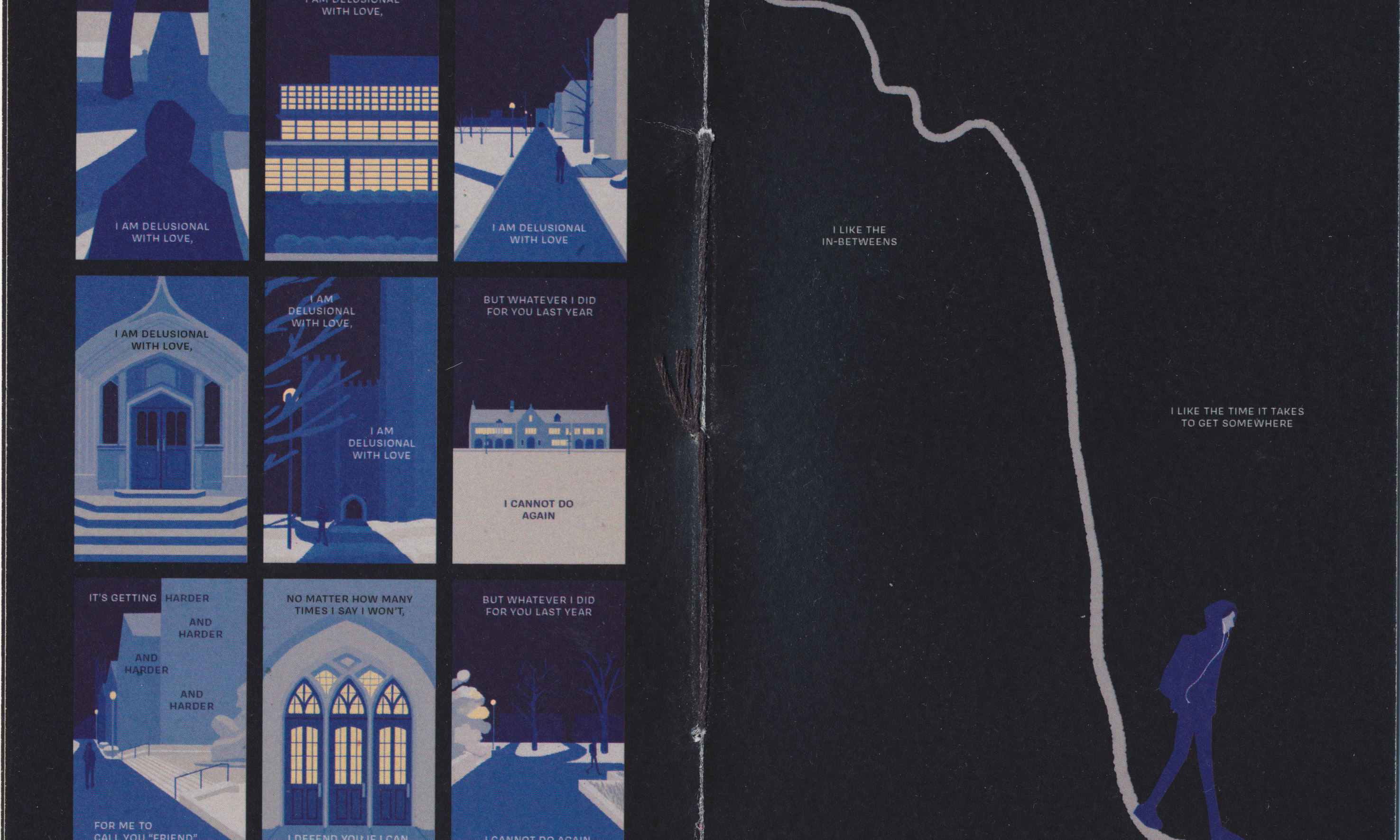

I developed a new way for friends to connect during the COVID-19 pandemic. Many of my friends (myself included) celebrated their birthdays in quarantine and missed being with friends in person. My small project serves as an aid to friends celebrating a birthday on a video call. It reacts when someone says “birthday,” “cake,” “21,” “rat,” “taurus,” or “color,” or when someone claps. The pandemic has been a very lonely time for many of us, so I wanted to ease that a little by making something friends can bond over and have fun with.

Instructions: Speak and things will appear. Start a group call, share your screen, and go wild. Or just play with it yourself!

From the beginning, I knew I wanted to create something collaborative that friends could do together. I had never used Teachable Machine before, so I explored all three of its modes (images, sounds, and body poses), as well as further explorations from The Coding Train, such as the snake game. I considered simple multiplayer minigames that friends could play together in real time, such as:

A minigame in which each player is represented by a dot. Players point a finger or thumb in a direction to move (as in the teachable arcade game and teachable snake), and instructions tell them to go to [x] quadrant or [x] place on the grid before time is up.

A discussion game in which players pick a side on a question, then take time to explain their reasoning. It could be lighthearted (dog or cat? bird or cow?) or more discussion-based, like a virtual step in/step out circle for community building, with statements like “I am a dog person,” “I am a morning person,” “I want this pandemic to be over,” “I miss my friends,” “I identify as [x],” “I play Animal Crossing,” or “I don’t have a Switch.”

Many of my peers brought in similarly complex ideas, but Hanahan steered us away from them because executing something so complex was outside the scope of the project. After that, I focused on the part of the prompt about creating something people could use during quarantine to share a connection with others. I thought of all the Zoom calls I was joining now that my classes had moved online, and wanted to see if I could make the Zoom experience more enjoyable and less stilted.

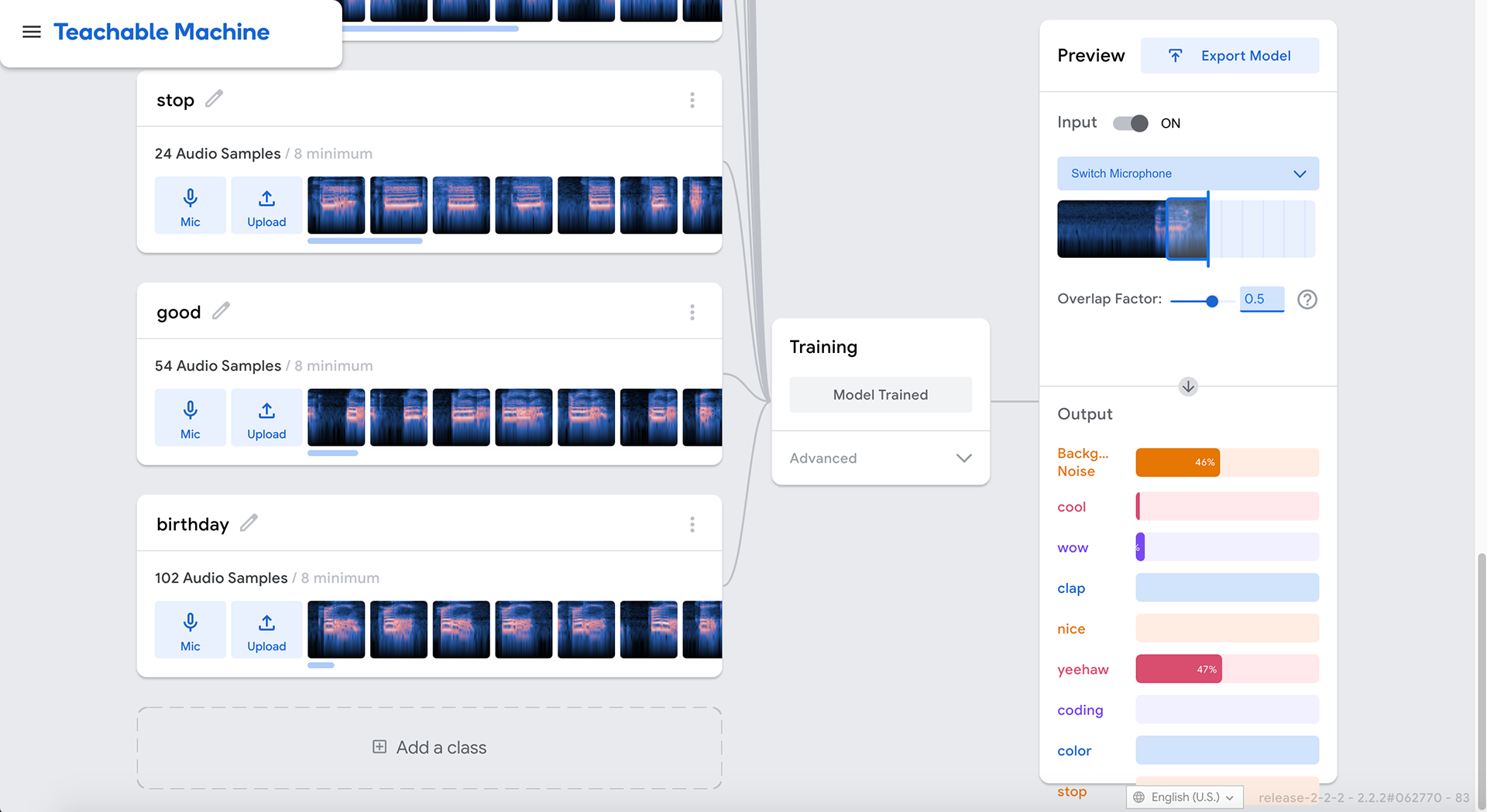

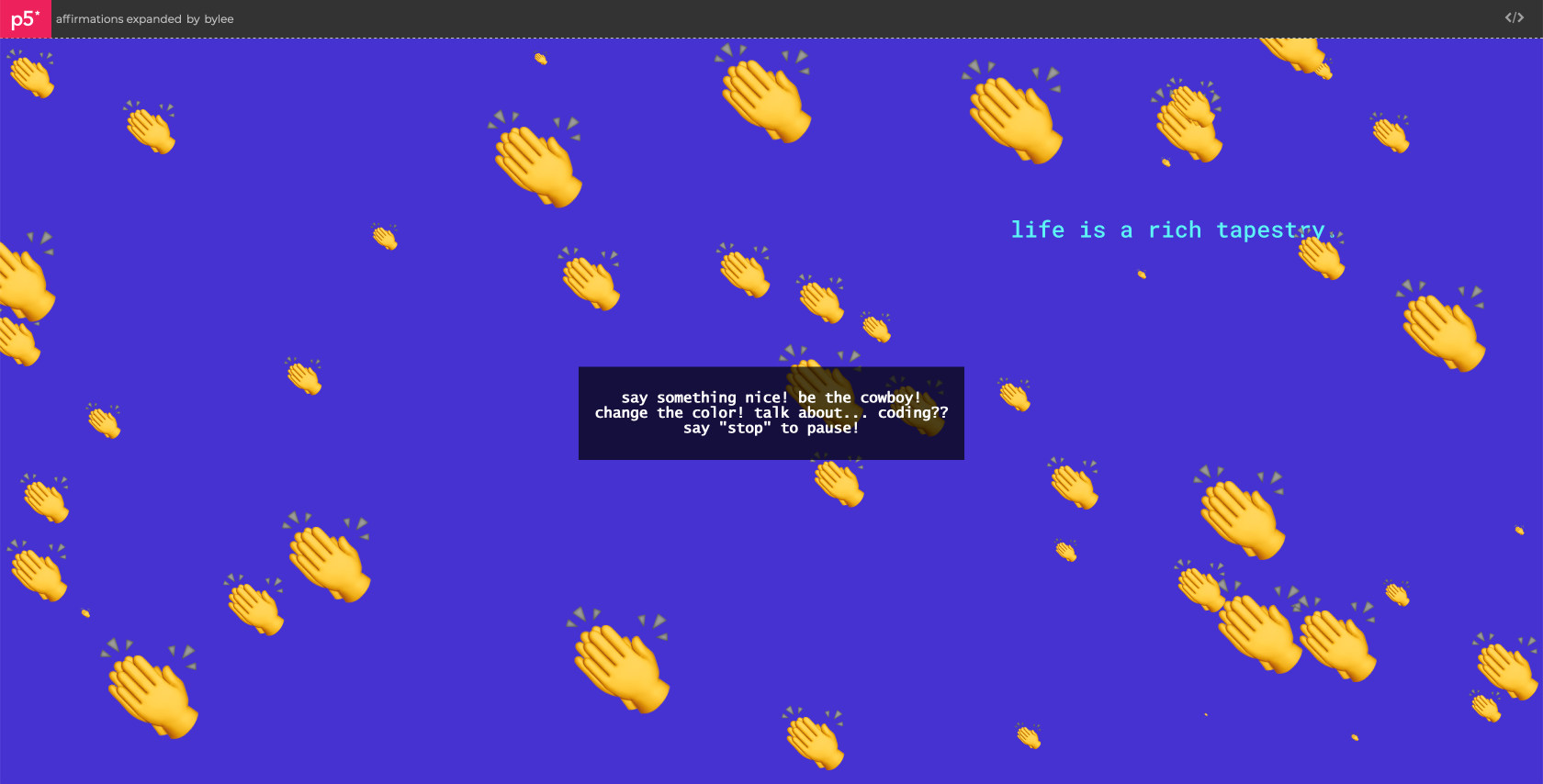

My next idea, which evolved into my final product, was to create something that reacted to people saying “affirmations” in a Zoom call, such as “wow!”, “cool!”, “nice!”, and other positive interjections. I noticed that Zoom lets you react with a clapping emoji or a thumbs-up emoji, but I felt those options were limited. I began training a Teachable Machine project to recognize different audio cues, including background noise, “cool,” “wow,” “yeehaw,” and clapping.

Note: Teachable Machine will also “recognize” words you didn’t train it on. It will match words that sound like your cues, words that don’t, and practically anything that isn’t background noise. I noticed this early on, but saw it less as a limitation and more as a bonus source of unpredictability.
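A general illustration of why this happens: a classifier of this kind, assuming a softmax output layer, assigns every input a confidence for each trained class, and those confidences always sum to 1. There is no built-in “none of these” answer, so an untrained word lands on whichever class it most resembles. The TypeScript sketch below is not Teachable Machine’s or ml5’s code; the labels and raw scores are hypothetical, and it assumes the project acts on the single top-ranked label.

```typescript
// Hypothetical prediction shape: one label plus its confidence.
type Prediction = { label: string; confidence: number };

// Softmax turns raw model scores into confidences that always sum to 1,
// so every input is spread across the trained classes.
function softmax(scores: number[]): number[] {
  const max = Math.max(...scores); // subtract the max for numerical stability
  const exps = scores.map((s) => Math.exp(s - max));
  const sum = exps.reduce((a, b) => a + b, 0);
  return exps.map((e) => e / sum);
}

// Return the single most confident label, as a simple demo might.
function topLabel(labels: string[], scores: number[]): Prediction {
  const probs = softmax(scores);
  let best = 0;
  for (let i = 1; i < probs.length; i++) {
    if (probs[i] > probs[best]) best = i;
  }
  return { label: labels[best], confidence: probs[best] };
}

// Hypothetical classes, loosely based on the cues described above.
const labels = ["Background Noise", "cool", "wow", "yeehaw", "clap"];

// Made-up raw scores for an untrained word that sounds a bit like "wow".
const untrained = topLabel(labels, [0.2, 0.5, 1.4, 0.3, 0.1]);
console.log(untrained.label); // "wow"
```

A confidence threshold (ignoring predictions below some cutoff) would make the model stricter, but here the looseness is what makes the output surprising.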

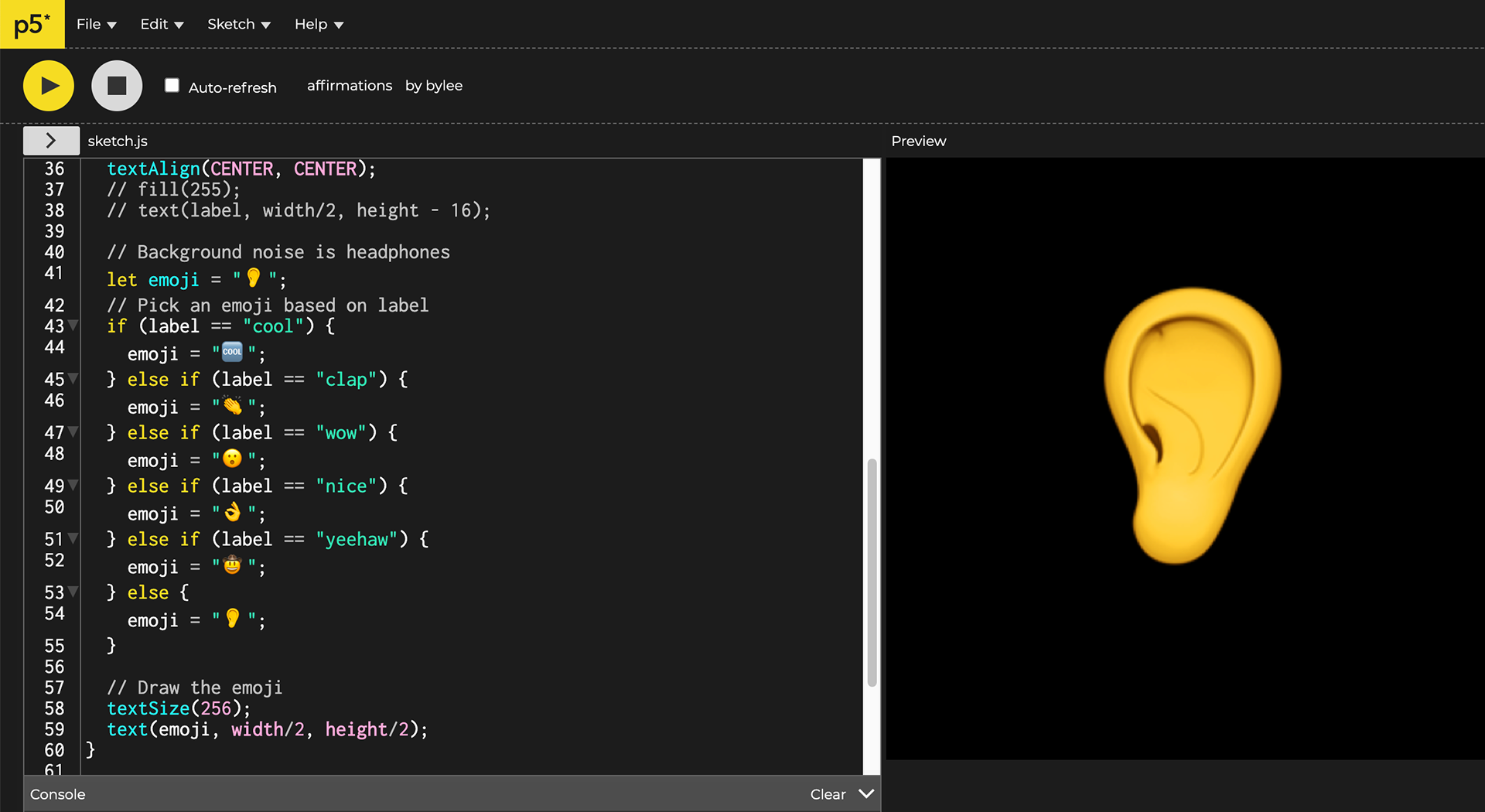

The Coding Train has a great demo on classifying audio using Teachable Machine and p5.js. The demo takes audio input and produces text output in the form of an emoji. I adapted this base code to my affirmations by plugging in my Teachable Machine project and swapping in new emojis. I later realized the code was drawing emojis in the same place every few seconds (instead of, for example, storing them in an array) and wanted to see if I could use that to my advantage. I edited the code to make the emojis appear at random sizes and in random positions around the screen (within a range), which made the output much more interesting because it was unpredictable. I also played with outputting text like “life is a rich tapestry” instead of emojis, and with changing the background color.
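The random placement described above can be sketched as follows. This is a minimal TypeScript version written outside p5.js so it runs on its own; the `Stamp` shape, the `makeStamp` and `randomIn` helpers, and the 24 to 96 px size range are all hypothetical choices, not the project’s actual code.

```typescript
// Hypothetical record for one emoji drawn on the canvas.
interface Stamp {
  emoji: string;
  x: number;
  y: number;
  size: number;
}

// Uniform random number in [min, max), similar to p5.js's random(min, max).
// The rng parameter lets tests substitute a predictable source.
function randomIn(min: number, max: number, rng: () => number = Math.random): number {
  return min + (max - min) * rng();
}

// Pick a random size, then a random position inset by half that size so the
// emoji stays roughly on screen. The 24-96 px range is an assumed value.
function makeStamp(
  emoji: string,
  width: number,
  height: number,
  rng: () => number = Math.random,
): Stamp {
  const size = randomIn(24, 96, rng);
  const margin = size / 2;
  return {
    emoji,
    x: randomIn(margin, width - margin, rng),
    y: randomIn(margin, height - margin, rng),
    size,
  };
}

// In p5.js these values would feed textSize(stamp.size) and text(stamp.emoji, stamp.x, stamp.y).
// If draw() never calls background(), earlier stamps stay visible underneath.
for (let i = 0; i < 3; i++) {
  console.log(makeStamp("🎂", 640, 480));
}
```

Insetting the range by half the size is one simple way to keep larger emojis from being cut off at the edges. Since p5.js draws text from its baseline by default, the inset is approximate.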

Hanahan encouraged me to explore this direction further, but advised me to make the input (i.e., the vocal cues I was training Teachable Machine on) more specific. To do so, I trained it on my classmates’ names and words like “coding” and “Teachable Machine.” But I felt this was too specific.

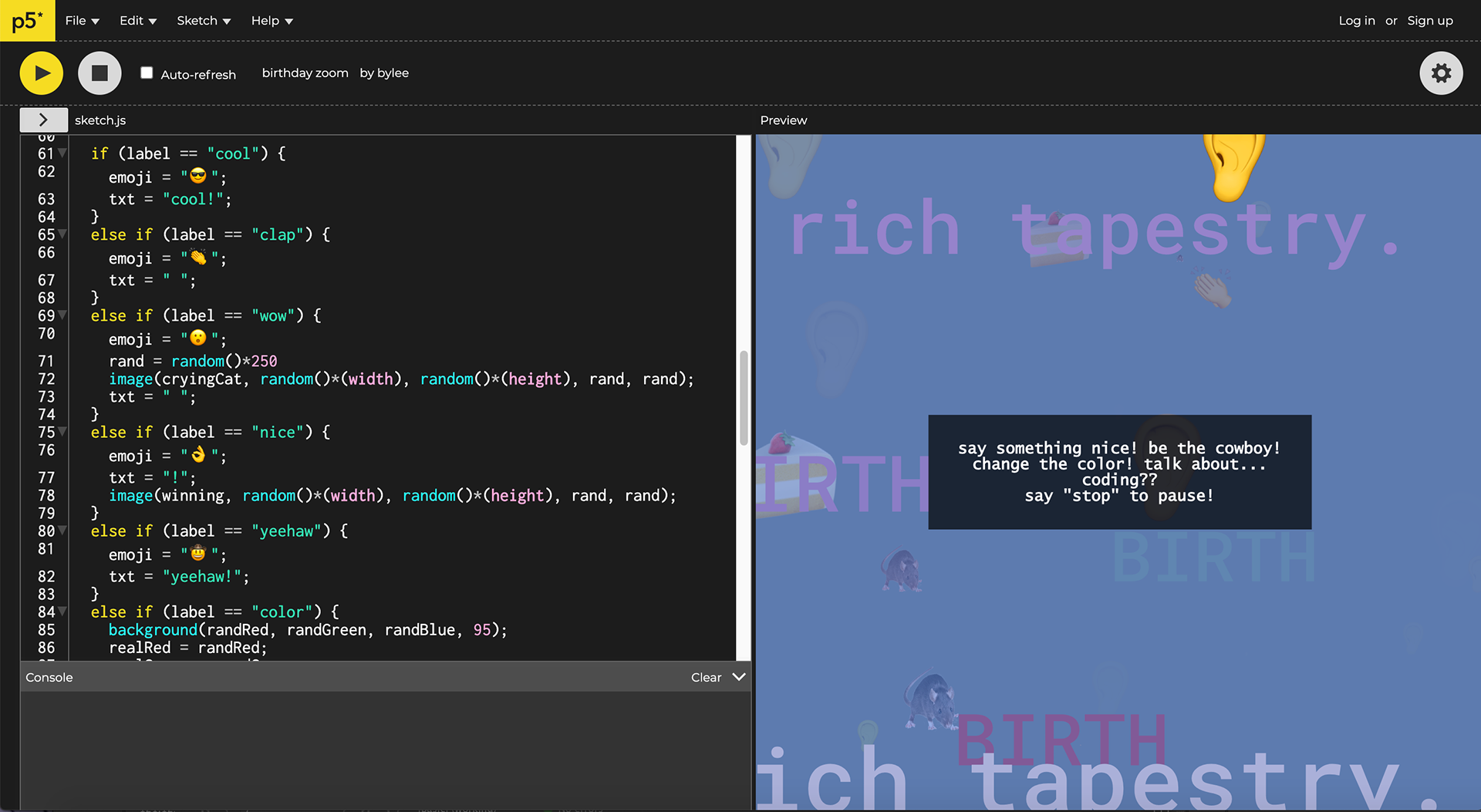

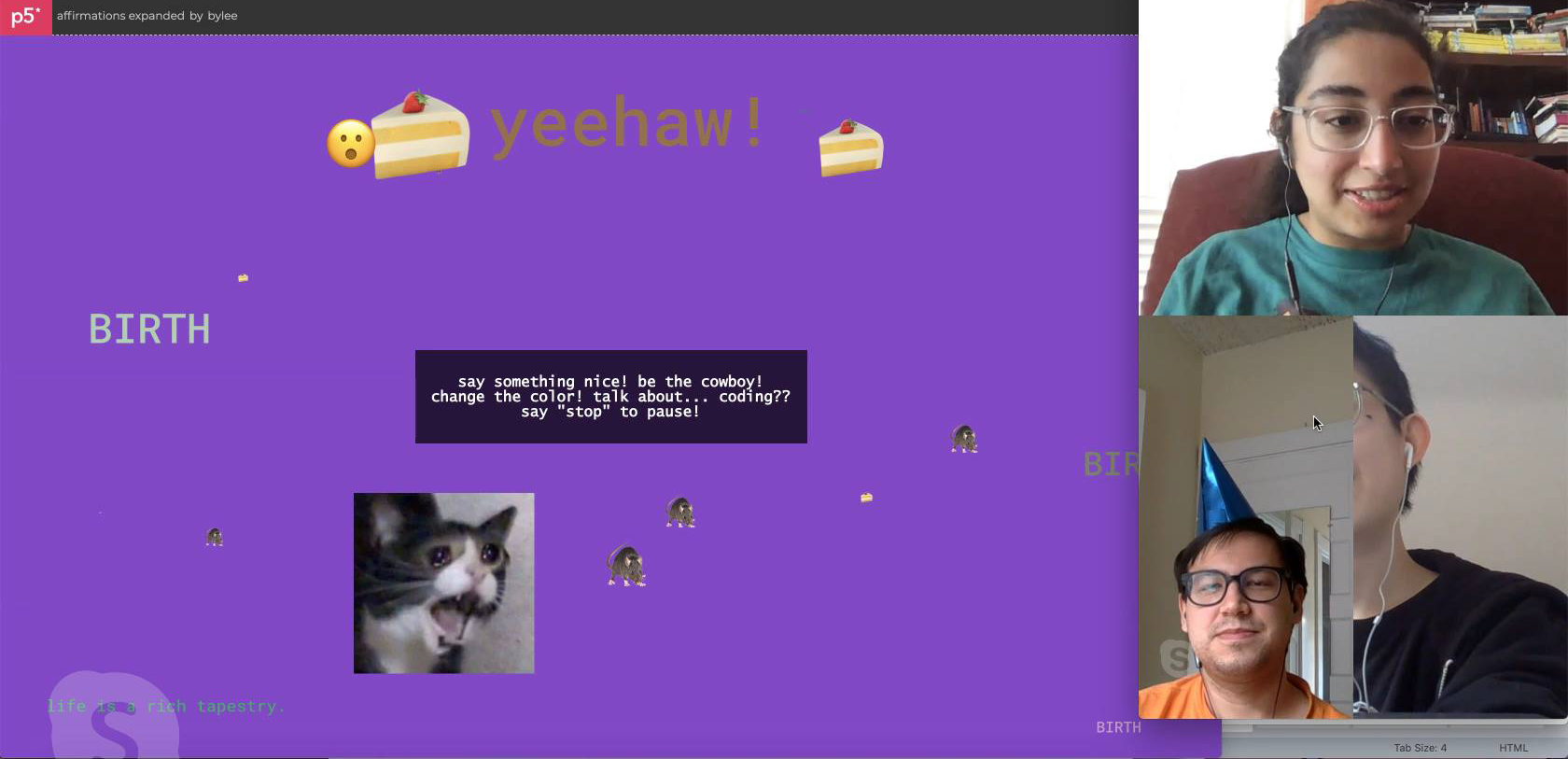

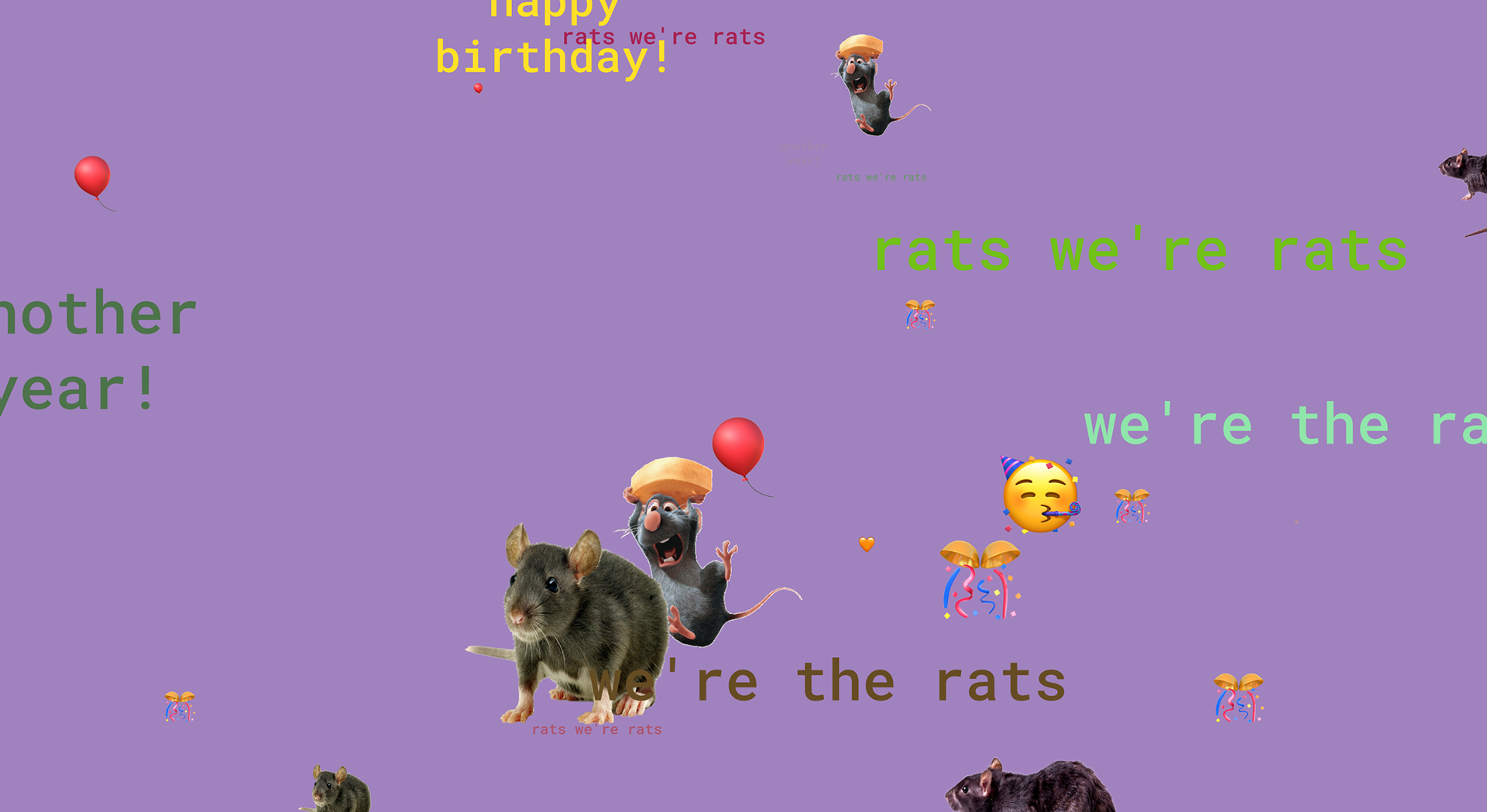

In early April, my sibling’s friends were getting ready to celebrate my sibling’s birthday on a video call. I thought of my project and wanted to see if I could adapt the vocal cues to a birthday setting. I included cues like “birthday” and “rat,” because my sibling was born in the Year of the Rat. The “birthday” output was emojis and text, but for the “rat” output I tried using images instead (of real rats, Remy from Ratatouille, and Mr. Ratburn from Arthur), and I enjoyed how that made the output even more unpredictable and varied. It was a hit! The four of us had fun seeing what we could make appear.

I further refined my project by fine-tuning my Teachable Machine model, adding a “prompts” toolbar and instructions, and moving the code to Glitch. I also added options for the user to play the birthday song (instrumental, so friends could sing along) and to save the canvas as a PNG. Weeks later, I used my project on a video call with my friends for my own birthday. We had a lot of fun with it and even used Zoom’s annotation tools to draw on the screen together, building on my project’s output. Just as intended.

Overall, this was a fun experiment in learning many tools I’d never heard of before. If I were to continue working on this, I would train Teachable Machine on more words (ideally with voices other than my own), add a wider range of outputs, and test it with a broader range of users. I fully realize I didn’t create anything revolutionary, but I’m happy if this brings at least one person joy while celebrating a birthday in this pandemic.

Attribution

IBM Plex Mono designed by Mike Abbink, IBM BX&D, in collaboration with Bold Monday. I love that a monospace typeface has so many weights. I’ve developed a deep appreciation not only for Plex’s letterforms but also for IBM’s intentional decision to make Plex open source!

Mentorship provided by Jonathan Hanahan

Valuable feedback provided by my classmates

User testing provided by my friends and family